How AI is Changing Sales Conversations

I founded my first conversational AI company in 2011. Back then, AI tended to be based on handcrafted rules expressed in code. The development of machine learning (ML) techniques helped improve speech recognition and natural language understanding tasks, but a conversation with a virtual character still had to be scripted by hand. That is, a human would have to try to anticipate everything a user might say at each point in a conversation and write a response for that input. As a result, these chat experiences would often feel limited and could easily go off the rails.

Today, the term AI tends to mean the use of a large language model (LLM) like ChatGPT, Claude, Gemini, or Llama. LLMs let us create much more realistic and engaging conversations with a virtual character, and they also make voice-based interactions more natural. In this article, I will cover why LLMs have enabled an entirely new generation of sales training and coaching platforms like SalesMagic.

Language Generation

Text-based LLMs excel at generating language. At its core, an LLM repeatedly picks a probable next word (more precisely, a token) given the text so far, based on patterns in the massive amount of data it's been trained on. The amazing thing is that this ends up producing coherent and grammatically correct dialogue, something that I spent a lot of time at Apple writing code to achieve only a few years ago. As a result, AI applications that are based on creating language are a perfect match for this technology. This is why you see a lot of companies offering AI-based tools that rewrite, summarize, or generate text for you.
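To make the "pick a probable next word" idea concrete, here is a toy sketch. The probability table and the `generate` function are invented for illustration; a real LLM computes these probabilities with a neural network over a vocabulary of many thousands of tokens rather than looking them up in a small table.

```python
import random

# Toy next-word table: hypothetical probabilities, not taken from a real model.
NEXT_WORD_PROBS = {
    "thanks": {"for": 0.9, "again": 0.1},
    "for": {"your": 0.7, "the": 0.3},
    "your": {"time": 0.6, "call": 0.4},
    "the": {"call": 1.0},
}

def generate(start, max_words=5, seed=0):
    """Extend `start` one word at a time by sampling from the table."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(max_words - 1):
        options = NEXT_WORD_PROBS.get(words[-1])
        if not options:  # no known continuation, so stop
            break
        choices, weights = zip(*options.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)

print(generate("thanks"))
```

Nothing in this loop checks whether the output is true; it only follows the probabilities, which is the root of the hallucination issue discussed below.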

By contrast, if you want to search for factually correct information, such as telling your customers about actual company policies or correctly citing case law in legal briefs, then a pure LLM is often not the right tool for the job because it can make up facts, something that's known in the field as hallucinating.

Hallucinations

Hallucination is the term used to describe when an LLM generates false information. AI companies talk about their LLMs hallucinating at a certain rate, such as 3% or 15%. But in reality, an LLM hallucinates 100% of the time: it has no inherent understanding of facts and is just making up language by picking words based on probabilities. It's just that a lot of the time it lucks out and the answers seem reasonable or correct to us.

A lot of effort has been applied recently to try to minimize hallucinations. This topic is too involved to cover fully here, but some example techniques include:

- Reinforcement Learning from Human Feedback (RLHF): human annotators rate the quality of the LLM's responses, and those ratings are used to refine the model. OpenAI hired dozens of contractors to improve ChatGPT in this way.

- Retrieval-Augmented Generation (RAG): lets an LLM look up precise information at runtime, e.g., by making an API call to a database or search index. In effect, this produces a hybrid system that combines the strengths of generative AI and retrieval-based techniques, and allows the system to access accurate, up-to-date information.

- Chain-of-thought (CoT): breaks down a large problem into smaller, more manageable parts. This allows the model to solve complex tasks as well as provide more accurate and transparent reasoning.
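As a rough illustration of the RAG idea, the sketch below retrieves the most relevant snippet for a question and places it in the prompt that would be sent to an LLM. Everything here is an assumption made for the example: the `DOCUMENTS` list stands in for a database or search index, the product facts are invented, and word overlap stands in for the embedding-based search a production system would more likely use.

```python
import re

# Hypothetical product facts standing in for a database or search index.
DOCUMENTS = [
    "The Starter plan costs $20 per user per month.",
    "The Enterprise plan includes single sign-on and audit logs.",
    "Support is available by email on weekdays.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents):
    """Put the retrieved text in front of the question for the LLM."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("How much does the Starter plan cost?", DOCUMENTS))
```

The LLM still generates the wording of the answer, but the facts it draws on now come from the retrieved text rather than from whatever it absorbed during training.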

Applicability to Sales Training

The above benefits and limitations of modern AI technologies make them a great fit for conversational AI applications, such as practicing sales calls with virtual prospects.

The ability to generate believable language means that conversational AI can be scaled to millions of users over thousands of specialties without the need to manually plan and write every response, and without the need to manually train machine learning systems with labelled data for each domain. LLM-driven conversations also handle unexpected inputs much more robustly, and the experience of conversing with one of these virtual prospects is markedly more impressive than the experience of talking to a chatbot only 5-10 years ago.

Also, the possibility of hallucinations is less of a concern for sales training applications because we're not using AI to answer factual questions or solve math equations. We're trying to simulate human-level conversations, and humans don't always know the right answer or say the right thing. In effect, humans hallucinate too; we just call it something else, such as being forgetful or telling stories.

That said, some of the techniques to address hallucinations can further improve sales training platforms. For example, RAG techniques can be used to let the LLM look up accurate company or product details for your specific scenarios during a conversation. They can also enable coaching features that search your saved sales calls and give you advice on areas of improvement.

Voice Interactions

So far, I've only been talking about text-based interactions with an LLM, such as a text messaging experience. However, LLMs have also revolutionized other technologies that support natural voice-based conversations.

For example, modern LLM-based speech synthesis systems can now produce much more realistic-sounding voices with more natural emotional ranges, and they can be adapted to a new voice from relatively tiny amounts of data, such as a few seconds of audio. Pair this with high-accuracy speech recognition services, and it becomes possible today to create interactive voice-based conversations with virtual characters that can finally cross the uncanny valley.

Voice Pipeline

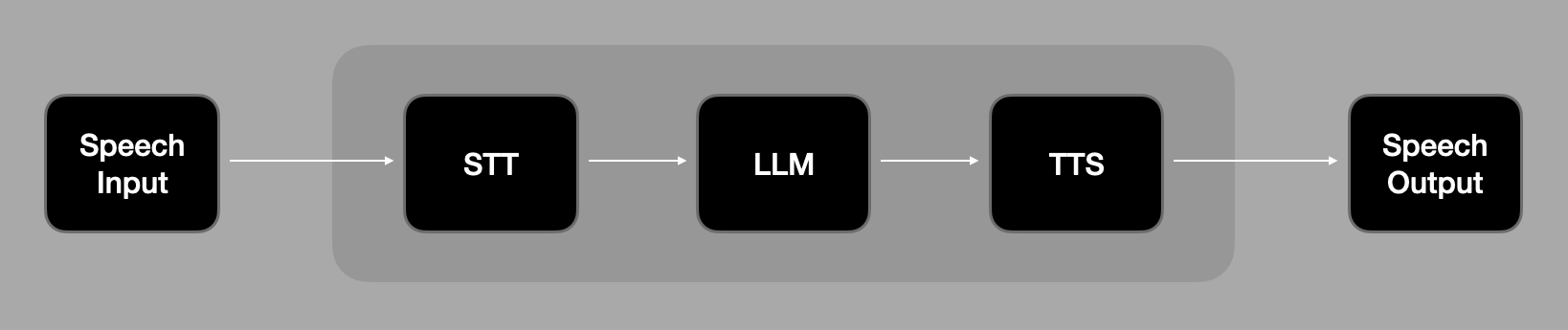

An AI-driven conversational experience today normally consists of the following pipeline of operations:

- Speech To Text (STT): converts the audio captured from your device's microphone into text.

- Large Language Model (LLM): takes the text from STT and produces a text response for the virtual prospect.

- Text To Speech (TTS): converts the text from the LLM into audio, with support for different voices and emotions.

For snappy-feeling conversations, it's important that all these services are fast and can stream their results incrementally. For example, at SalesMagic, we use Groq (different from Grok) as our primary LLM service because it's blazingly fast, at least twice as fast as other services we've tried. We are constantly tracking Time To First Token (TTFT) and Time To First Byte (TTFB) metrics to try to reduce conversational latency!
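The sketch below shows the shape of one conversational turn through this pipeline and how TTFT can be measured. All three services are stubs written for this example (`speech_to_text`, `llm_stream`, and `text_to_speech` are hypothetical names, not any vendor's API), and the 10 ms delay per token is simulated.

```python
import time

def speech_to_text(audio):
    """Stub STT: a real service would transcribe audio bytes."""
    return audio.decode("utf-8")

def llm_stream(prompt):
    """Stub LLM: yields a canned reply one token at a time."""
    for token in ["Sure,", "tell", "me", "more."]:
        time.sleep(0.01)  # simulated per-token latency
        yield token

def text_to_speech(token):
    """Stub TTS: a real service would return synthesized audio."""
    return token.encode("utf-8")

def run_turn(audio):
    """Run STT -> LLM -> TTS, producing audio chunks as tokens arrive."""
    start = time.perf_counter()
    text = speech_to_text(audio)
    ttft = None
    chunks = []
    for token in llm_stream(text):
        if ttft is None:
            ttft = time.perf_counter() - start  # time to first token
        chunks.append(text_to_speech(token))
    return chunks, ttft

chunks, ttft = run_turn(b"Hi, do you have a minute?")
print(f"{len(chunks)} audio chunks, TTFT {ttft * 1000:.1f} ms")
```

Because the LLM output is consumed as a stream, speech synthesis can begin on the first token instead of waiting for the full reply, which is why TTFT matters more for perceived latency than total generation time.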

Several other techniques that help sell the experience of a natural voice interaction have also gotten substantially better in recent years thanks to AI models. These include noise cancellation and turn detection.

Noise Cancellation

Noise cancellation means removing background noise from the audio input. This can include the echo of the virtual prospect's voice through your speakers (echo cancellation) as well as other people speaking in your environment (background voice cancellation).

Back in 2013, we developed conversational experiences that let users speak to virtual characters on their phones or tablets. One thing we learned quickly is that users often talk to their devices in very noisy environments, such as with the TV on, with vacuum cleaners or fans running, or with other people talking in the background.

The ability to remove these background noises and voices is critical to ensuring that the speech-to-text system can accurately hear what you, and only you, say. So, AI-powered noise cancellation technologies, such as Krisp, are really important.

Turn Detection

Another critical aspect of holding a conversation is turn taking, i.e., knowing when you're done talking so that the virtual prospect can start responding. The traditional way to do this is with a technique known as Voice Activity Detection (VAD), which determines if an audio signal includes human speech or not. For example, if the system detects that you have not spoken for, say, 0.8 seconds, then it will assume that you've stopped talking and it's time for the virtual prospect to respond.
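A minimal sketch of this silence-timeout approach follows. It assumes audio arrives as fixed-length frames of samples and uses a simple energy threshold to decide whether a frame contains speech; the frame length and threshold are illustrative values, and production VADs typically use trained models rather than raw energy.

```python
import math

FRAME_SECONDS = 0.1      # assumed frame length
SILENCE_TIMEOUT = 0.8    # pause length used in the example above
ENERGY_THRESHOLD = 0.05  # assumed RMS level separating speech from silence

def is_speech(frame):
    """Treat a frame as speech if its RMS energy exceeds the threshold."""
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    return rms > ENERGY_THRESHOLD

def find_end_of_turn(frames):
    """Return the index of the frame where the turn is declared over, or None."""
    heard_speech = False
    silent_frames = 0
    needed = round(SILENCE_TIMEOUT / FRAME_SECONDS)  # 8 frames of silence
    for i, frame in enumerate(frames):
        if is_speech(frame):
            heard_speech = True
            silent_frames = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_frames += 1
            if silent_frames >= needed:
                return i
    return None

loud, quiet = [0.5] * 160, [0.0] * 160
# Speech, a short 0.3 s pause, more speech, then a long silence.
frames = [loud] * 5 + [quiet] * 3 + [loud] * 4 + [quiet] * 10
print(find_end_of_turn(frames))
```

The short pause in the middle does not end the turn because it is under the timeout, but any pause of 0.8 seconds or longer would, whether or not the speaker had finished.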

However, this doesn't work very well in practice because humans can pause for various reasons during normal speech, e.g., "let me think about that for a second...". A better solution that has become possible recently is to use an AI model that determines if you've finished your current thought based on what you've just said. This can help to figure out if you're still mid-sentence, or if you've completed your thought or question. For SalesMagic, we use LiveKit and their model-based approach for turn detection, which is also used by ChatGPT's voice mode. This has made a tremendous difference to the naturalness of SalesMagic conversations.
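The sketch below illustrates the general idea of letting the content of the transcript influence how long to wait before responding. It is not LiveKit's model or API: `looks_complete` is a hand-written toy heuristic standing in for a learned end-of-turn classifier, and the word list and timeout values are assumptions chosen for the example.

```python
# Words that usually signal the speaker has more to say (illustrative list).
TRAILING_WORDS = {"and", "but", "so", "because", "um", "uh", "like", "the", "a", "to"}

def looks_complete(transcript):
    """Toy stand-in for a learned end-of-turn classifier."""
    text = transcript.strip().lower()
    if not text or text.endswith(("...", ",")):
        return False  # trailing off or mid-clause
    if text.endswith(("?", ".", "!")):
        return True   # finished sentence or question
    return text.split()[-1] not in TRAILING_WORDS

def silence_needed(transcript, short=0.3, long=2.0):
    """Seconds of silence to wait before the virtual prospect replies."""
    return short if looks_complete(transcript) else long

for line in ["Let me think about that for a second...", "What does it cost?"]:
    print(f"{line!r}: wait {silence_needed(line)} s")
```

With this kind of signal, the system can respond quickly after a finished question while giving the speaker more room after an unfinished thought.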

Summary

Putting all these developments together, the last few years have seen several breakthrough AI technologies appear that, for the very first time, make it possible to create engaging, compelling, and effective sales training conversations at scale.

SalesMagic is founded on these modern transformational technologies to help you improve your sales technique. Try it out today to see how far we've come from chatbots that don't really hear you, to virtual prospects that can actually augment and improve your sales training efforts.

SalesMagic. Where practice makes profit. Rewrite your sales story and experience the pinnacle of sales performance simulations and training.
